Security Diaries #1

I've just started work and thought, what best to document what I've learned than a diary that I keep, detailing what I've learned, and it's importance.

I won't be detailing every single thing I've learned, as that would be redundant, and a waste of time, but I will be bringing to attention so important things coming my way.

Last week was the start of a new chapter, where everything I have learned so far is being put to the test. I've re-joined Sage after finishing my degree and I'm now part of the Graduate programme for the Global Security team. I made the most of this half-week to catch up with current trends in Cybersecurity, study for the Security+ certificate, and I also had the chance to attend the most recent OWASP London event being hosted in our office.

Below is a summary of some of the highlights of that week.

Cloud Environments and their vulnerabilities: A summary of Cloud Data Security Snapshot by Wiz

For those unfamiliar, Wiz is a cloud security company specialising in CWPP and CSPM solutions. They’re one of the leading providers in the field. Thanks to their access to a vast amount of customer data, they’re able to extrapolate trends and offer insight into the most current and common vulnerabilities in cloud environments.

And if, for whatever reason, you haven’t heard about the cloud—imagine it as a Pay-As-You-Go datacentre. You pay for what you use, spin up virtual machines and databases as needed, and shut them down when you’re done. All without the massive cost of hosting it yourself. The most popular platforms include AWS, Azure, and GCP.

Here are some highlights from the report:

Cloud Compute & Sensitive Information

Wiz found that 54% of cloud environments have VMs and serverless instances exposed to the public that also contain sensitive data like PII or payment information.

35% of environments have VMs or serverless instances that both expose sensitive data and are vulnerable to high or critical CVEs.

Exposed Databases

72% of cloud environments have publicly exposed PaaS databases lacking access controls.

Small amount of containers are still vulnerable

12% of cloud environments still have containers that are both publicly exposed and exploitable.

This is significantly lower than other compute resources—likely because newer projects tend to use containers, and with the shift-left trend, they’re naturally more secure.

Some other interesting statistics

- 29% of environments have exposed assets containing personal information.

- 35% have compute assets that both expose sensitive data and are vulnerable to critical or high-severity threats—giving attackers both the target and the means.

- 4% have misconfigured HTTP/S endpoints that expose sensitive data.

- 1% have storage buckets that allow admin-level lateral movement.

- 22% have buckets that allow write access for all users, increasing the risk of unauthorised data modification or ransomware.

- 3% of service accounts with access to sensitive data are accessible by all users.

What should you do?

Most of these issues stem from public exposure. A simple first step is to restrict access to cloud resources. From there, remove PII, implement access controls, and follow best practices for cloud hygiene.

October 2025 OWASP London Recap

Trust and Traceability: Developer Observability in the AI-Powered SDLC by Matias Madou, Ph.D.

In a world powered by humans and AI, Matias explored how to navigate this ever-evolving field. The SDLC has changed dramatically since the breakthrough of ChatGPT-3.

In just a year, we’ve gone from ChatGPT answering basic questions and writing small code snippets, to Copilot+ enabling pair programming and snippet completion, to Cursor/Windsurf generating full-stack applications (not perfectly, but better than expected).

Claude, a leading LLM, showed that while only 3.4% of workers are in CS/Maths, they account for 37.2% of Claude’s usage—mostly for developing and maintaining software and websites.

AI’s Best Use Cases:

- Explaining code

- Research and learning

- Prototyping

AI’s Weak Spots:

- Spaghetti code

- Secure programming

- Performance optimisation

With more code being generated by AI, developers need a stronger grasp of security—because that’s one of the biggest gaps in LLMs.

Using AI as a pair developer has two outcomes:

- If you’re an average dev, it leads to a 10x increase in security risks.

- If you’re security-savvy, it leads to a 10x boost in productivity.

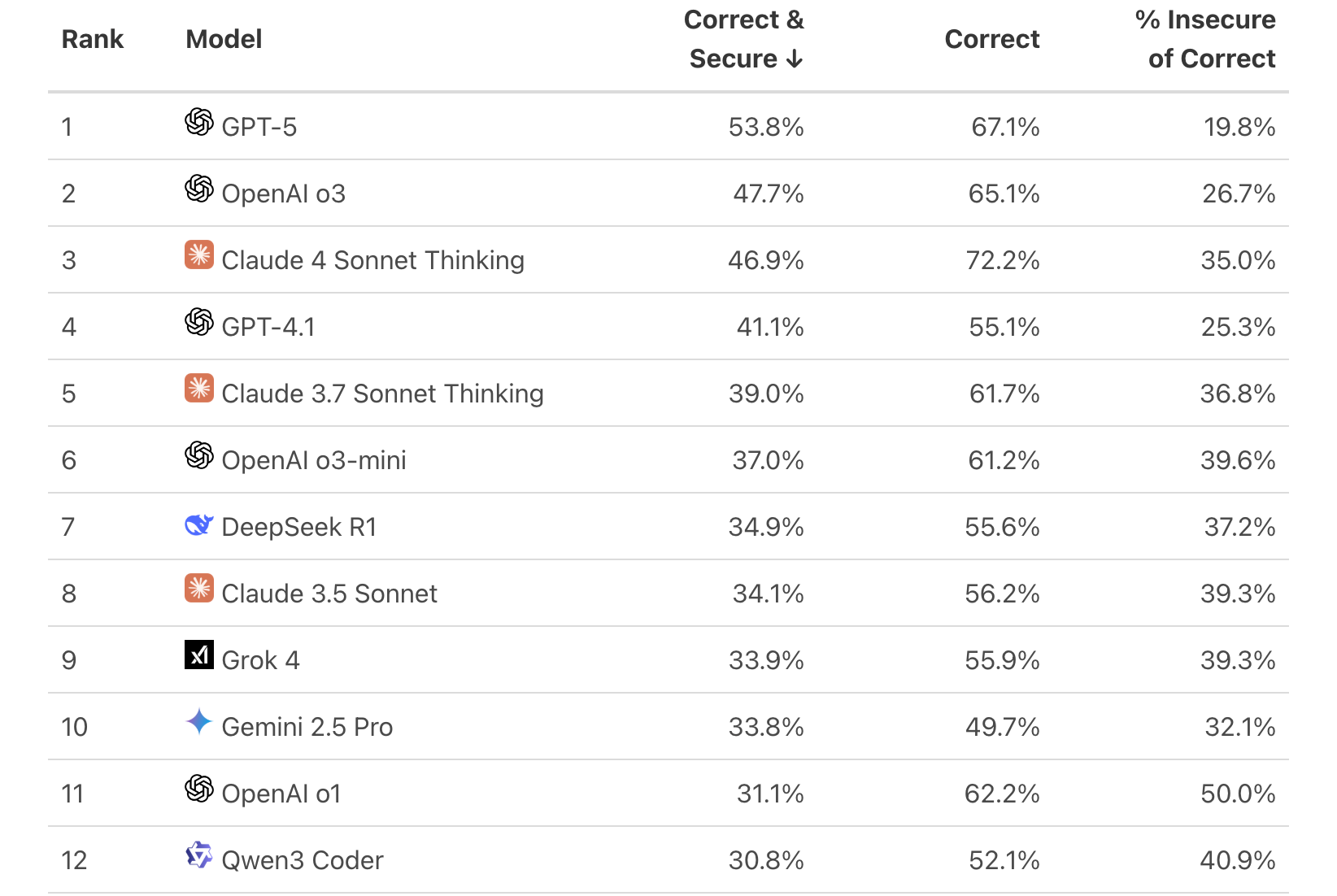

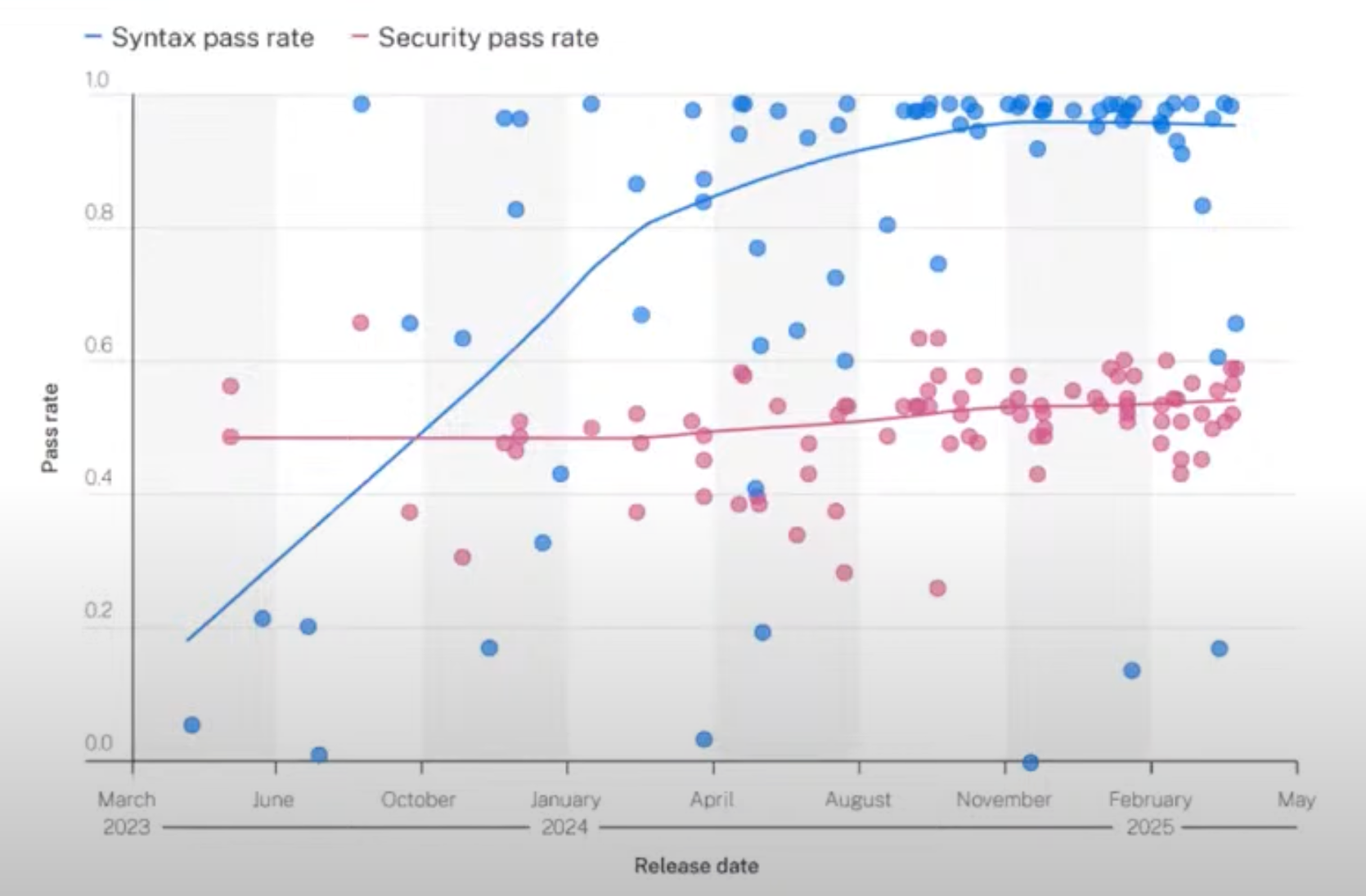

BaxBench, a benchmark for secure code generation, shows just how wide the gap is.

Secure Code Warrior has tracked improvements in syntax—but not in security.

Questions CISOs Should Be Asking:

- Are your developers using AI?

- Which models?

- How much of their code is AI-generated?

- How secure is that code?

- How security-proficient are your developers?

Governance needs to keep pace with AI adoption. Otherwise, developers may produce more code—but with more vulnerabilities. That’s dangerous in the long run.

A Stanford study showed that critical thinking is dwindling—especially in programming. Two groups were tasked with writing code, one with AI and one without. When asked if their code was secure, the AI group was more confident. But in reality, the non-AI group wrote more secure code. That gap between confidence and reality is a serious concern.

LLM Attacks and Defences – Prompt Hacking by Dominic Whewell

Prompt hacking involves manipulating the input box of an LLM to make it behave in unintended ways. You can bypass moral safeguards, leak system prompts, and even expose sensitive data like API keys.

OWASP LLM01:2025 outlines two types:

- Direct Prompt Injection: User input directly alters the model’s behaviour.

- Indirect Prompt Injection: Malicious content from external sources (e.g., websites) alters the model’s behaviour.

Defences Against Prompt Hacking:

- Filtering: Remove malicious keywords or refuse risky prompts.

- Instruction Defence: Teach the LLM to expect adversarial prompts and self-check.

- XML Tagging: Help the LLM distinguish between system and user input.

- Dual LLM Architecture: One model generates, the other verifies—acting as a defensive layer.

Multi-Tool Deduplication Without LLMs by Michael Peran Truscott

In defence-in-depth setups, it’s common for multiple tools to report the same vulnerability—often with different names, severities, or locations. Deduplication is essential, but tricky.

Common Deduplication Methods:

- Location matching (can be inconsistent)

- CVEs (not always reported)

- CWEs (same issue, different labels)

- Description matching (requires NLP)

- Manual review (expensive)

The best method? A mathematical approach called Bag of Words:

- Count word frequency across vulnerability descriptions.

- Compare the resulting vectors.

- Fast, accurate, and efficient.

Preprocessing (lemmatisation, noun extraction, stop-word removal) helps, but Bag of Words strikes the best balance—without needing full-blown LLMs.

Interesting Reading

TruffleHog can read deleted GitHub Repositories:

- https://trufflesecurity.com/blog/anyone-can-access-deleted-and-private-repo-data-github

- https://trufflesecurity.com/blog/trufflehog-now-finds-all-deleted-and-private-commits-on-github

Thanks for reading this edition of Security Diaries. My goal is to document the progress I make learning more about technology and cybersecurity, with the hopes of inspiring others, informing others, and show that I know what I claim to know.